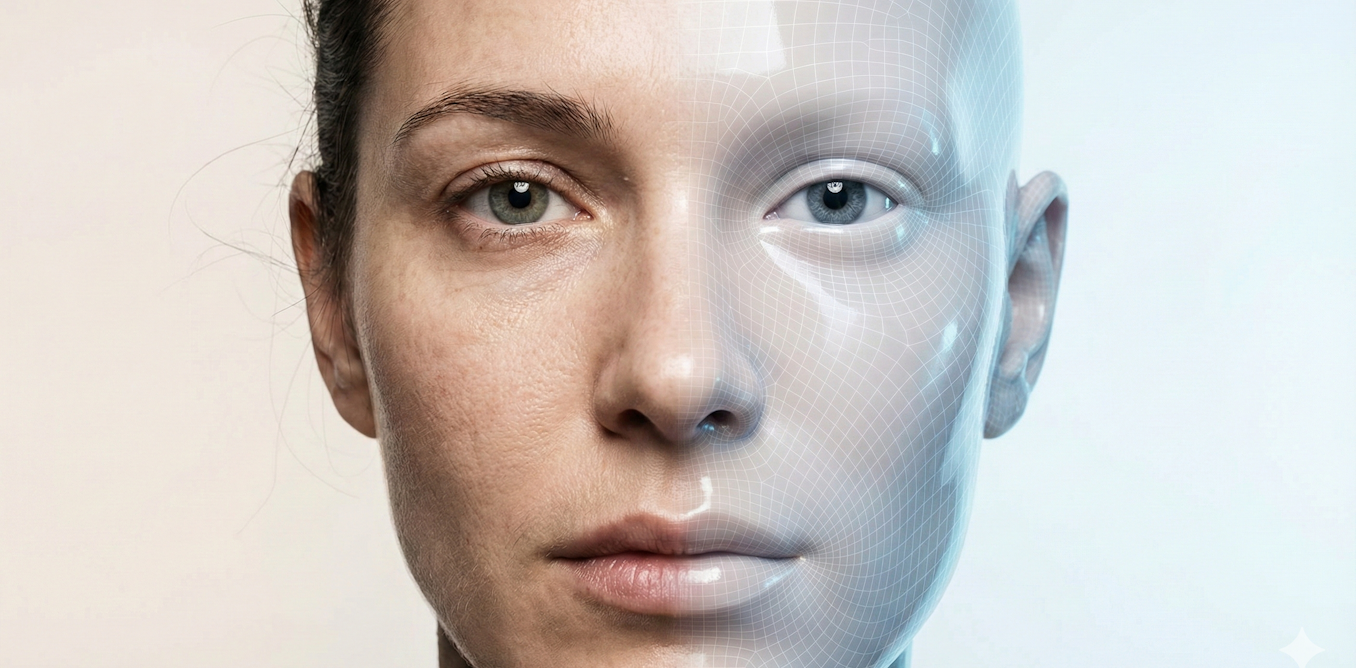

The Deepfake Revolution: How AI is Blurring Reality and What You Need to Know

The world of video is undergoing a seismic shift. Thanks to breakthroughs in artificial intelligence, creating realistic audio-visual content is no longer limited to professionals with expensive equipment. Tools like OpenAI’s Sora 2 and Google’s Veo 3, alongside a surge of innovative startups, empower anyone to bring their ideas to life with astonishing speed and polish.

You can now describe a scene, have a large language model like ChatGPT or Gemini write a script, and generate compelling video – all in minutes. AI agents are even automating the entire process. This means the ability to create convincing, storyline-driven deepfakes is now widely accessible.

The Growing Threat of Deepfakes

This rapid increase in quantity, combined with the near-perfect impersonation of real people, presents significant challenges. Detecting deepfakes is becoming increasingly difficult, especially in today’s fast-paced media landscape, where attention is fragmented and verification lags behind.

We’re already seeing the real-world consequences:

* Misinformation: False narratives spread rapidly, impacting public opinion. Recent examples include AI-generated deepfakes of doctors spreading inaccurate health information on social media (as reported by The Guardian).

* Targeted Harassment: Individuals are being subjected to malicious and damaging deepfake content.

* Financial Scams: Sophisticated schemes that use deepfakes are defrauding individuals and organizations (Wired details the rise of these scams).

* Political Manipulation: Deepfakes pose a threat to democratic processes by potentially influencing elections and eroding trust in institutions (Congressional testimony highlights these concerns).

The Future Is Now: Real-Time Deepfakes

The evolution isn’t slowing down. Over the next year, we can expect deepfakes to move towards real-time synthesis. This means videos will be generated that accurately mimic the subtle nuances of human appearance and behavior, making detection even harder.

The focus is shifting from simply creating visually realistic images to achieving temporal and behavioral coherence. Models are now being developed to:

* Generate live or near-live content, rather than pre-rendered clips (research details this progression).

* Capture not just how a person looks, but how they move, sound, and speak in different situations (recent advancements in identity modeling).

Imagine video call participants being synthesized entirely in real time, or AI-driven avatars responding dynamically to prompts. Scammers will likely deploy these responsive avatars instead of relying on static deepfake videos.

Beyond Pixel-Peeping: A New Defense Strategy

As the line between synthetic and authentic media blurs, relying on human judgment alone will become insufficient. We need to shift our focus to infrastructure-level protections. This includes:

* Secure Provenance: Using cryptographic signatures to verify the origin and authenticity of media. The Coalition for Content Provenance and Authenticity (C2PA) is leading the charge in developing these standards.

* **Multimodal Forensic
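
To make the secure-provenance idea above more concrete, here is a minimal Python sketch of signing a piece of media and later checking that it has not been altered. It is an illustration under stated assumptions, not a description of how C2PA works in detail: C2PA-style systems rely on public-key signatures tied to certificates, while this sketch swaps in an HMAC with a shared secret because that is available in Python’s standard library. The names `SIGNING_KEY`, `sign_media`, and `verify_media` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical shared secret, for illustration only. Real provenance
# systems use public-key signatures rather than a shared key.
SIGNING_KEY = b"demo-key-not-for-production"


def sign_media(media_bytes: bytes, key: bytes = SIGNING_KEY) -> str:
    """Return a hex tag that is bound to these exact bytes and this key."""
    return hmac.new(key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, tag: str, key: bytes = SIGNING_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_media(media_bytes, key)
    return hmac.compare_digest(expected, tag)


# Stand-in for the bytes of a real video file.
original = b"frame data from the original video"
tag = sign_media(original)

print(verify_media(original, tag))               # True: media is untouched
print(verify_media(original + b" edited", tag))  # False: media was altered
```

The point of the sketch is that any change to the media, even a single byte, makes verification fail, so a viewer or platform can check content against its recorded origin instead of judging it by eye.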
