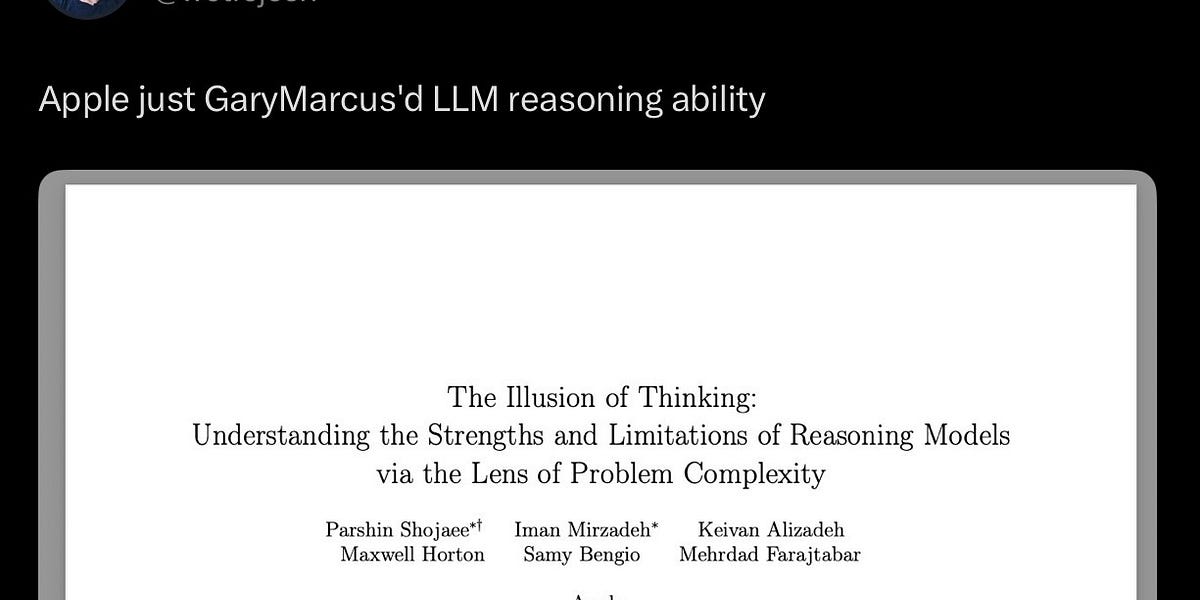

Large language models (LLMs) are generating considerable buzz, but recent performance hiccups are revealing fundamental limitations. It’s becoming increasingly clear that current LLM approaches fall far short of achieving artificial general intelligence (AGI).

These models excel at specific tasks (generating text, translating languages, and answering questions based on existing data) but struggle with true understanding and reasoning. You might have noticed inconsistencies, factual errors, and a general lack of common sense in their responses.

Here’s a breakdown of why the current paradigm isn’t delivering on the AGI promise:

* Superficial Learning: LLMs primarily learn statistical relationships between words, not genuine comprehension of concepts.

* Lack of Real-World Grounding: They operate in a purely textual realm, disconnected from the physical world and embodied experience.

* Brittle Reasoning: Even slight variations in input can lead to dramatically incorrect outputs, demonstrating a lack of robust reasoning abilities.

* Inability to Handle Novelty: LLMs struggle with situations outside their training data, lacking the adaptability of human intelligence.
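
To make the first and last points concrete, here is a deliberately tiny sketch in Python. It is not how a real LLM works (those are large neural networks, not lookup tables); it is a toy bigram counter, with a made-up corpus and hypothetical function names, that only illustrates what "learning statistical relationships between words" means and what happens when the input falls outside the training data.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None  # the toy model has nothing to say about unseen words
    return counts.most_common(1)[0][0]

# Illustrative corpus, invented for this example.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" most often here
print(predict_next(model, "dog"))  # "dog" never appeared, so None
```

The toy model "knows" that "cat" tends to follow "the" without any notion of what a cat is, and it has no answer at all for a word it never saw. Real models generalize far better than this, but the sketch shows the kind of gap the bullets above describe.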

I’ve found that relying solely on scaling up these models, by increasing their size and training data, isn’t a viable path to AGI. A different strategy is needed, one that focuses on incorporating more robust cognitive architectures.

This alternative approach emphasizes:

* Causal Reasoning: Understanding cause-and-effect relationships, not just correlations.

* Symbolic Representation: Using symbols to represent concepts and relationships, enabling more abstract thought.

* Knowledge Integration: Combining different types of knowledge: factual, procedural, and experiential.

* Continual Learning: Adapting and improving over time, rather than being limited by a fixed training dataset.
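
The difference between correlation and causation in the first bullet can be shown with a small simulation. This is a minimal sketch under invented assumptions: a hidden variable `z` drives both `x` and `y`, and the noise levels, sample size, and function names are all made up for illustration. Observing the data shows a strong correlation between `x` and `y`, but setting `x` by hand (an intervention) reveals that `x` has no effect on `y`.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def simulate(n, intervene=False, seed=0):
    """Generate (x, y) pairs that share a hidden common cause z.

    With intervene=True, x is set independently of z, which mimics
    an experiment where we control x ourselves.
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)                # hidden common cause
        if intervene:
            x = rng.gauss(0, 1)            # x forced, no longer tied to z
        else:
            x = z + rng.gauss(0, 0.3)      # x driven by z
        y = z + rng.gauss(0, 0.3)          # y driven by z, never by x
        xs.append(x)
        ys.append(y)
    return xs, ys

observed = pearson(*simulate(5000))
intervened = pearson(*simulate(5000, intervene=True))
print(f"observed correlation:   {observed:.2f}")
print(f"after intervening on x: {intervened:.2f}")
```

A system that only tracks the observed correlation would predict that changing `x` changes `y`. A system that models the cause-and-effect structure would not, and that distinction is what the causal reasoning bullet is pointing at.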

The path to AGI is complex and requires a fundamental shift in how we approach artificial intelligence. It’s time to move beyond the hype and focus on building systems that truly understand, reason, and learn like humans do.

Don’t be misled by the current limitations. A more thoughtful and integrated approach is essential for unlocking the full potential of AI.