Beyond Bigger Models: Building Trustworthy AI for Healthcare’s Future

The hype around large language models (LLMs) is undeniable, but in healthcare, simply deploying a bigger model isn’t the answer. Truly impactful clinical AI demands a fundamental shift in focus, from the models themselves to the robust infrastructure surrounding them. This article explores why and outlines the key elements for building AI systems that clinicians can confidently rely on.

The High Stakes of Context in Clinical AI

Healthcare is a domain where precision is paramount. A slight misinterpretation of patient data, a forgotten nuance in medical history, or a reliance on outdated information can have serious consequences. Consider these critical points:

- Context is king. LLMs, while powerful, are susceptible to “hallucinations” – generating plausible but incorrect information.

- The “I don’t know” problem is crucial. A model confidently providing a wrong answer is far more dangerous than one that admits uncertainty.

- Agentic AI amplifies errors. As AI systems move beyond analysis to action – issuing alerts, suggesting treatments, or ordering tests – the impact of contextual errors dramatically increases.

Thus, success in healthcare AI won’t be about who builds the largest model, but who builds the smartest systems around those models.
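The “I don’t know” behavior described above can be sketched as a small gate in front of the model’s answer. This is a minimal illustration, not a clinical implementation: the names `ModelAnswer`, `Response`, `answer_or_abstain`, and the `ABSTAIN_BELOW` threshold are hypothetical, and the sketch assumes the model exposes a calibrated confidence score between 0 and 1.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold; a real value would have to come from clinical validation.
ABSTAIN_BELOW = 0.85


@dataclass(frozen=True)
class ModelAnswer:
    text: str
    confidence: float  # assumed to be a calibrated probability in [0, 1]


@dataclass(frozen=True)
class Response:
    text: Optional[str]  # None when the system abstains
    abstained: bool
    reason: str


def answer_or_abstain(answer: ModelAnswer, threshold: float = ABSTAIN_BELOW) -> Response:
    """Return the answer only when confidence clears the threshold; otherwise abstain."""
    # A malformed score (including NaN) is treated as a reason to abstain.
    if not 0.0 <= answer.confidence <= 1.0:
        return Response(None, True, "confidence out of range")
    if answer.confidence < threshold:
        return Response(None, True, "confidence below threshold")
    return Response(answer.text, False, "confidence at or above threshold")
```

The design choice here is that every failure mode, including a malformed score, resolves to abstention rather than to an answer.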

The Four Pillars of Reliable Clinical AI

To navigate these challenges, a new roadmap is needed. We must prioritize building systems that are not only clever but also demonstrably safe and trustworthy. This means focusing on:

- Retrieval contracts: Ensuring the AI consistently accesses and utilizes the correct, relevant information.

- Safety scaffolds: Implementing guardrails and checks to prevent the AI from making potentially harmful recommendations.

- Feedback-aware pipelines: Designing systems that learn from every interaction, continuously improving accuracy and reliability.

- Auditable and validated output chains: Providing a clear, traceable record of how the AI arrived at its conclusions, allowing for thorough review and validation.
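Two of the pillars above, retrieval contracts and auditable output chains, can be illustrated with a short sketch. Everything here is an assumption made for illustration: the `Document` shape, the one-year freshness window, and the function names are hypothetical, and the hash-chained log is just one simple way to make a record tamper-evident.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Document:
    doc_id: str
    patient_id: str
    recorded_on: date
    text: str


def enforce_retrieval_contract(docs, patient_id, today, max_age_days=365):
    """Keep only documents for the right patient that are recent enough.

    Raises LookupError instead of letting the model run without valid context.
    """
    cutoff = today - timedelta(days=max_age_days)
    kept = [d for d in docs if d.patient_id == patient_id and d.recorded_on >= cutoff]
    if not kept:
        raise LookupError("retrieval contract violated: no valid context")
    return kept


def _entry_hash(step, payload, prev_hash):
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    body = json.dumps({"step": step, "payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_audit_entry(chain, step, payload):
    """Append a JSON-serializable record linked to the previous entry by its hash."""
    prev_hash = chain[-1]["hash"] if chain else ""
    entry = {"step": step, "payload": payload, "prev": prev_hash,
             "hash": _entry_hash(step, payload, prev_hash)}
    chain.append(entry)
    return entry


def verify_chain(chain):
    """Return True only if every entry still matches its hash and its predecessor."""
    prev_hash = ""
    for entry in chain:
        expected = _entry_hash(entry["step"], entry["payload"], prev_hash)
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True
```

In use, a pipeline would call `enforce_retrieval_contract` before generation, then log the retrieved `doc_id` values and the generated output with `append_audit_entry`, so a reviewer can later run `verify_chain` and see which sources fed which conclusion.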

A Pipeline-First Approach to Healthcare AI

This roadmap isn’t just theoretical. It’s guiding a new generation of healthcare AI development. A successful strategy includes:

Robust medical data infrastructure: Establishing a solid foundation for accessing, managing, and securing patient data.

pipeline-first thinking: Prioritizing the entire workflow, from data input to output validation, rather than solely focusing on the model.

Modular agents with verifiable output: Building AI components that are self-reliant, testable, and capable of explaining their reasoning.

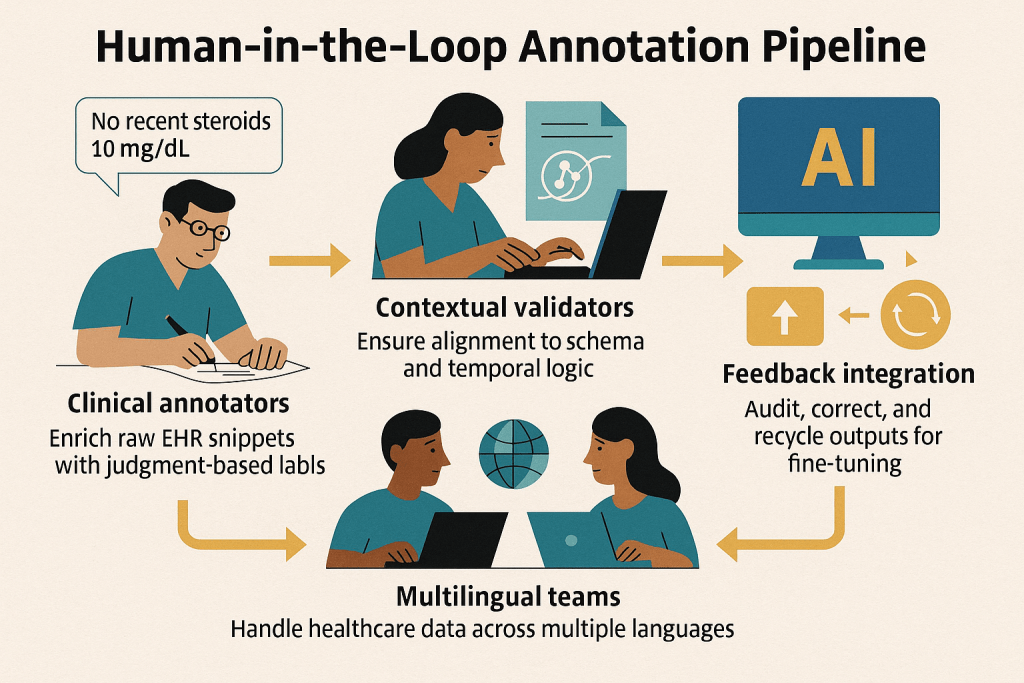

Collaborative platforms with human-in-the-loop (HITL) oversight: Integrating human expertise into the process, allowing clinicians to review, refine, and validate AI-driven insights.
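As a rough sketch of how human-in-the-loop oversight might be wired into such a pipeline, the example below routes agent output to clinician review and records the reviewer’s decision for later feedback. The routing policy (anything that proposes an action, or cites no sources, goes to a clinician) and all names (`AgentOutput`, `Route`, `ReviewDecision`, `route_output`, `record_review`) are hypothetical assumptions, not a description of any particular platform.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Route(Enum):
    INFORMATIONAL = "informational"        # shown as a cited, read-only summary
    CLINICIAN_REVIEW = "clinician_review"  # blocked until a clinician decides


@dataclass(frozen=True)
class AgentOutput:
    summary: str
    cited_doc_ids: Tuple[str, ...]  # sources the agent claims to rely on
    proposes_action: bool           # e.g. an alert, treatment suggestion, or test order


@dataclass(frozen=True)
class ReviewDecision:
    reviewer: str
    approved: bool
    note: str = ""


def route_output(output: AgentOutput) -> Route:
    """Send anything actionable or uncited to a clinician (assumed policy)."""
    if output.proposes_action or not output.cited_doc_ids:
        return Route.CLINICIAN_REVIEW
    return Route.INFORMATIONAL


def record_review(output: AgentOutput, decision: ReviewDecision, feedback_log: list) -> bool:
    """Store the clinician's decision so the pipeline can learn from it later."""
    feedback_log.append({
        "summary": output.summary,
        "cited_doc_ids": list(output.cited_doc_ids),
        "reviewer": decision.reviewer,
        "approved": decision.approved,
        "note": decision.note,
    })
    return decision.approved
```

Keeping the routing rule in one small, testable function means the review policy can be audited and changed without touching the model.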

Infrastructure: The Foundation of Trustworthy AI

Building better models is vital, but it’s only one piece of the puzzle. The real challenge lies in creating trustworthy systems around those models. These systems must validate information, trace the AI’s reasoning, and flag any uncertainty.

We are focused on delivering AI agents that not only operate safely but also explain their logic, cite their data sources, and defer judgment when ambiguity exists. This is the standard clinical-grade AI demands, and it’s the core principle guiding our platform’s design.

Let’s Collaborate on the Future of Clinical AI

We’re actively seeking partners who share our vision for responsible and impactful AI in healthcare. If you’re working on any of the following, we’d love to connect:

- Retrieval-augmented agents

- LLM orchestration within Electronic Health Records (EHRs)

- Multilingual medical Natural Language Processing (NLP)

- Human-in-the-loop data validation at scale

Let’s build a future where AI empowers clinicians, improves patient outcomes, and transforms healthcare for the better.
