Enhancing the Reliability of AI in Medical Diagnosis: Reporting Guidelines

Artificial intelligence (AI) is rapidly transforming healthcare, particularly in diagnostics. However, ensuring the accuracy and trustworthiness of AI-driven diagnostic tools is paramount. Here’s a look at the development of reporting guidelines designed to achieve just that.

The Need for Clear Standards

Previously, studies evaluating the diagnostic accuracy of AI tools lacked standardized reporting. This made it tough to compare studies and assess the true value of AI interventions. You need consistent, transparent reporting to build confidence in these technologies.

Introducing STARD-AI: A Framework for Clarity

To address this gap, a dedicated framework – STARD-AI – emerged. It’s designed specifically for diagnostic accuracy studies involving AI. I’ve found that clear guidelines are essential for researchers and clinicians alike.

What Does STARD-AI Cover?

This guideline focuses on ensuring comprehensive reporting across several key areas:

* Study Design: Detailed descriptions of how the study was conducted, including the AI model used and the data it was trained on.

* Data Handling: Transparent reporting of data sources, preprocessing steps, and potential biases.

* Performance Metrics: Clear definitions and reporting of key performance indicators, such as sensitivity, specificity, and accuracy.

* Clinical Impact: Assessment of how the AI intervention might affect patient care and outcomes.

* Technical Details: Comprehensive information about the AI model, including its architecture, training process, and validation methods.
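
The performance metrics named in the list above all derive from a 2x2 table comparing the AI's output with the reference standard. The following is a minimal Python sketch of those standard definitions, not something prescribed by STARD-AI itself. The names `ConfusionCounts` and `diagnostic_metrics` are hypothetical, and any counts you pass in are your own study data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from a 2x2 table (hypothetical helper, not part of STARD-AI)."""
    tp: int  # diseased cases the AI flagged as positive
    fp: int  # non-diseased cases the AI flagged as positive
    tn: int  # non-diseased cases the AI flagged as negative
    fn: int  # diseased cases the AI flagged as negative


def diagnostic_metrics(c: ConfusionCounts) -> dict:
    """Return sensitivity, specificity, and accuracy as fractions in [0, 1]."""
    diseased = c.tp + c.fn
    non_diseased = c.tn + c.fp
    if diseased == 0 or non_diseased == 0:
        # Sensitivity or specificity is undefined without both groups present.
        raise ValueError("need at least one diseased and one non-diseased case")
    return {
        "sensitivity": c.tp / diseased,        # true positive rate
        "specificity": c.tn / non_diseased,    # true negative rate
        "accuracy": (c.tp + c.tn) / (diseased + non_diseased),
    }
```

For instance, calling `diagnostic_metrics(ConfusionCounts(tp=90, fp=40, tn=160, fn=10))` with these made-up counts gives a sensitivity of 0.9 and a specificity of 0.8. Reporting the underlying counts alongside the derived metrics lets readers recompute them.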

Why Is STARD-AI Important for You?

As a healthcare professional, you can benefit from STARD-AI in several ways:

* Improved Evaluation: It allows you to critically assess the validity and reliability of AI diagnostic tools.

* Informed Decision-Making: You can make more informed decisions about integrating AI into your practice.

* Enhanced Patient Safety: By promoting rigorous evaluation, STARD-AI ultimately contributes to safer and more effective patient care.

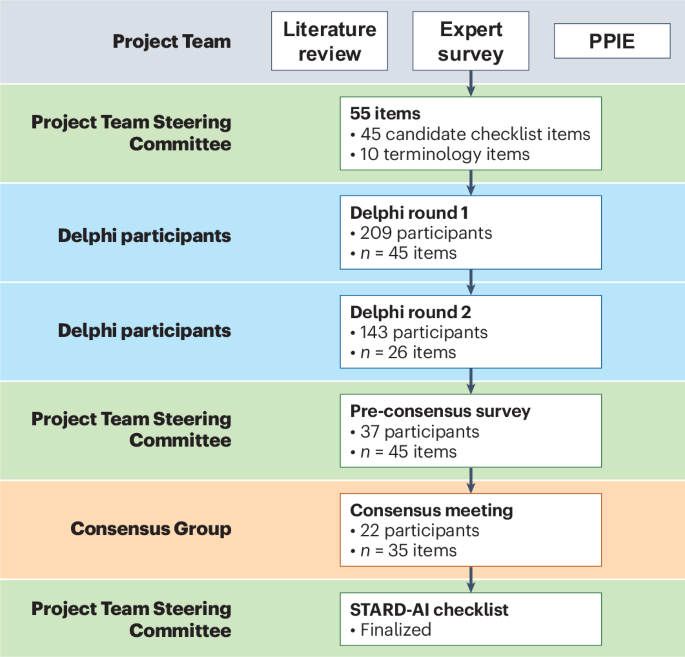

The Evolution of the Guidelines

The initial focus was on developing specific reporting guidelines for diagnostic accuracy studies. This led to a more comprehensive protocol for artificial intelligence-centred diagnostic test accuracy studies. Here’s what’s been refined:

* Expanded Scope: The guidelines now encompass a broader range of AI applications in diagnostics.

* Greater Detail: More detailed guidance is provided on specific reporting items.

* Increased Accessibility: Resources are available to help researchers and clinicians implement the guidelines effectively.

Ultimately, STARD-AI represents a significant step forward in ensuring the responsible and reliable implementation of AI in medical diagnosis. It’s about building trust, promoting transparency, and improving patient outcomes.