The Rise of Deepfakes & the Surprisingly Vulnerable Human Eye: Can Super-Recognizers Spot the Fakes?

The digital world is rapidly evolving, and with it, the sophistication of artificial intelligence. One particularly concerning development is the rise of deepfakes – hyperrealistic, AI-generated images and videos that can convincingly mimic real people. This poses a significant threat to trust, security, and even democratic processes. But how good are we at spotting these increasingly convincing forgeries? Surprisingly, even individuals with exceptional facial recognition skills – often dubbed “super-recognizers” – struggle with this task, according to recent research.

As a specialist in the intersection of human perception and emerging technologies, I’ve been closely following the advancements in both AI-driven image generation and the cognitive science behind how we recognize faces. This new study, led by Dr. Heather Gray and her team, sheds light on a critical vulnerability in our ability to discern reality from fabrication.

Who are Super-Recognizers, and Why Should We Care?

Super-recognizers are individuals with an extraordinary ability to learn and remember faces. They consistently outperform the average person in facial recognition tasks, often achieving accuracy rates far exceeding 90%. These individuals are highly sought after in law enforcement and security roles, where accurate identification is paramount. You can even find a database of verified super-recognizers at the Greenwich Face and Voice Recognition Laboratory.

Given their exceptional skills, it’s logical to assume super-recognizers would be adept at identifying subtle inconsistencies in AI-generated faces. However, the initial findings of Gray’s research challenge this assumption.

The Experiment: Super-Recognizers vs. Deepfakes

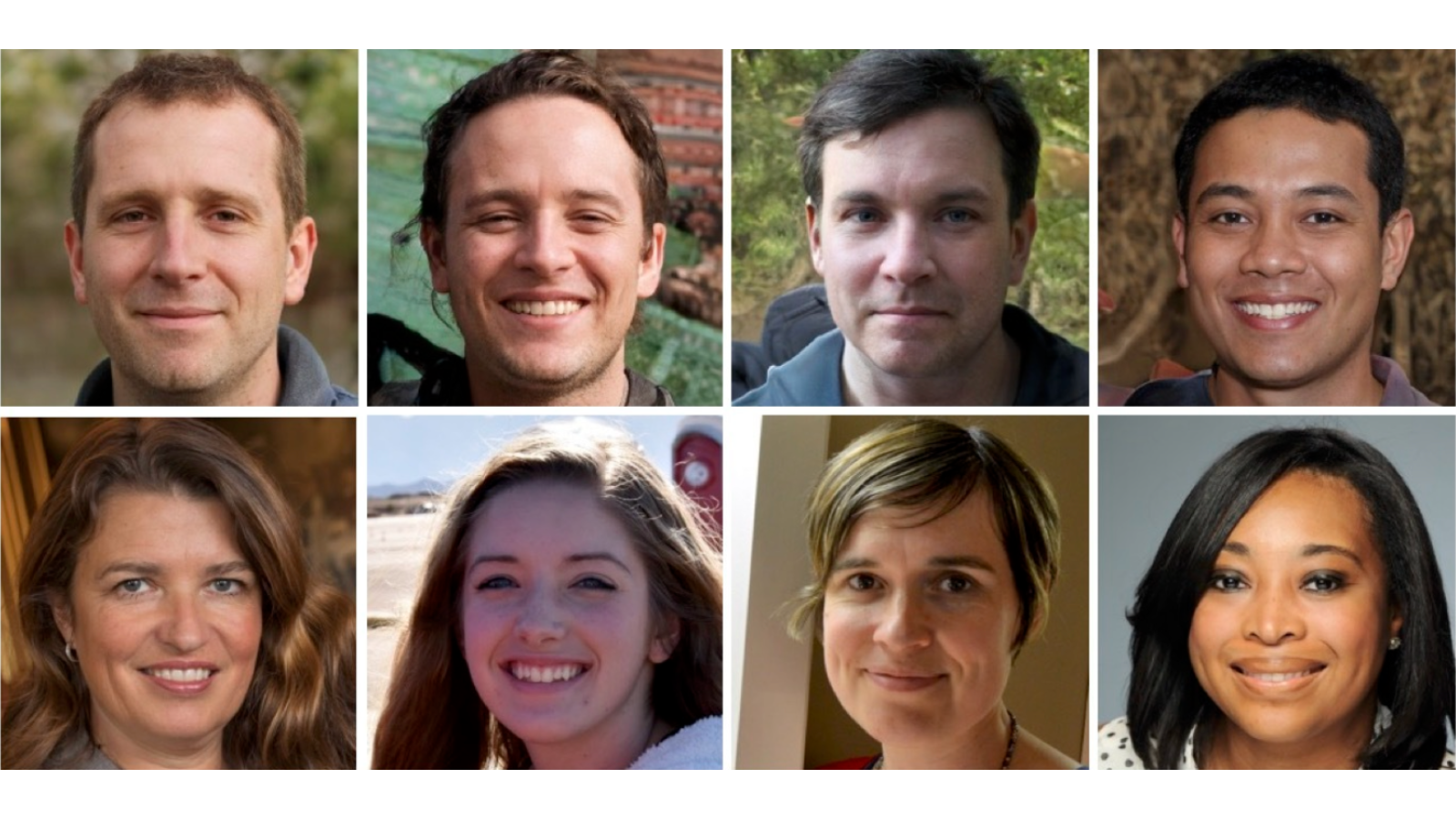

The study, published in i-Perception, compared the performance of super-recognizers (those scoring in the top 2% on facial recognition tests) with a control group of “typical” recognizers. Participants were presented with images – some real, some generated by AI – and asked to judge each one's authenticity within a 10-second timeframe.

The results were startling. Super-recognizers correctly identified only 41% of the AI-generated faces, which is below the 50% that random guessing would yield in a real-or-fake judgment. Typical recognizers fared worse, correctly identifying around 30% of the fakes. Both groups also tended to misidentify real faces as fake: super-recognizers made this error 39% of the time, and typical recognizers 46%.
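To see what these four percentages mean together, the short sketch below combines each group's hit rate on fakes with its error rate on real faces into a single overall accuracy. It assumes, hypothetically, that participants saw equal numbers of real and AI-generated images (the article does not state the split), and the names `RATES` and `balanced_accuracy` are illustrative only.

```python
# Hit rate: share of AI-generated faces correctly called fake (article's figures).
# False-alarm rate: share of real faces wrongly called fake (article's figures).
RATES = {
    "super-recognizers": {"hit": 0.41, "false_alarm": 0.39},
    "typical recognizers": {"hit": 0.30, "false_alarm": 0.46},
}


def balanced_accuracy(hit: float, false_alarm: float) -> float:
    """Mean of accuracy on fakes and accuracy on real faces.

    ASSUMPTION: equal numbers of real and fake images were shown.
    """
    correct_rejection = 1.0 - false_alarm  # real faces correctly called real
    return (hit + correct_rejection) / 2.0


for group, r in RATES.items():
    acc = balanced_accuracy(r["hit"], r["false_alarm"])
    print(f"{group}: {acc:.0%} overall (chance = 50%)")
```

Under that assumption, super-recognizers land at roughly 51% overall and typical recognizers at roughly 42%, so neither group reliably separated real faces from fakes before training.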

Can Training Bridge the Gap? A Promising, but Preliminary, Answer

Recognizing the need to improve detection rates, Gray’s team implemented a brief, five-minute training session. Participants were shown examples of common errors found in AI-generated faces – things like asymmetrical features, unnatural lighting, or inconsistencies in skin texture. They received real-time feedback on their accuracy, culminating in a recap of key “red flags” to watch for.

The training proved remarkably effective. Super-recognizers’ accuracy on fakes jumped to 64%, while typical recognizers reached 51%. However, the tendency to misidentify real faces as fake remained relatively consistent, suggesting that training primarily improves the ability to detect fakes, rather than reducing false positives.
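One common way to express "more fakes caught at a similar false-positive rate" is the signal-detection sensitivity index d′, the difference between the z-scores of the hit rate and the false-alarm rate. The sketch below is illustrative rather than the study's own analysis. It uses the article's hit rates and assumes the post-training false-alarm rates stayed at exactly 39% and 46%, since the article only says they were "relatively consistent".

```python
from statistics import NormalDist


def d_prime(hit: float, false_alarm: float) -> float:
    """Signal-detection sensitivity: z(hit rate) - z(false-alarm rate)."""
    z = NormalDist().inv_cdf  # inverse CDF of the standard normal
    return z(hit) - z(false_alarm)


# "before"/"after" are the article's hit rates on AI-generated faces.
# ASSUMPTION: false-alarm rates are unchanged by training.
GROUPS = {
    "super-recognizers": {"before": 0.41, "after": 0.64, "false_alarm": 0.39},
    "typical recognizers": {"before": 0.30, "after": 0.51, "false_alarm": 0.46},
}

for group, g in GROUPS.items():
    before = d_prime(g["before"], g["false_alarm"])
    after = d_prime(g["after"], g["false_alarm"])
    print(f"{group}: d' {before:+.2f} -> {after:+.2f}")
```

A d′ of zero means real and fake faces are not being told apart at all, and a negative value means fakes are called "fake" less often than real faces are. Under the stated assumption, both groups move from at or below zero to clearly positive sensitivity after training.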

The Importance of Slowing Down: A Key Takeaway

A fascinating observation emerged from the data: trained participants took significantly longer to scrutinize the images. Typical recognizers slowed down by approximately 1.9 seconds, while super-recognizers increased their inspection time by 1.2 seconds.

This highlights a crucial point: detection requires deliberate, focused attention. In a world of information overload, we often rely on quick, intuitive judgments. When assessing the authenticity of a face, especially online, it’s vital to slow down and carefully examine the details. Look for subtle anomalies, inconsistencies in lighting, and unnatural textures.

Caveats and Future Research: A Word of Caution

While the training results are encouraging, it’s important to acknowledge the study’s limitations. As noted by Dr. Meike Ramon, a leading expert in face processing at the Bern University of Applied Sciences, the training effect wasn’t re-tested, leaving open the question of long-term retention. Furthermore, because separate participant groups were used for the pre- and post-training experiments, it is difficult to determine how much training improves any one individual’s skills. Ideally, future research will involve testing the same individuals before and after training to isolate the impact of the
