The Rise of Neural Processing Units in Windows: A New Era of On-Device AI

The landscape of personal computing is undergoing an important transformation, driven by the increasing capabilities of artificial intelligence (AI). Microsoft is at the forefront of this evolution, shifting towards a model where more AI processing happens directly on your device, rather than relying solely on the cloud. This isn’t simply about faster performance; it’s about a fundamental change in how Windows operates, prioritizing privacy, efficiency, and responsiveness. As of October 7, 2025, this shift is gaining momentum with the integration of dedicated Neural Processing Units (NPUs) into Windows, promising a smarter, more intuitive user experience. This article delves into the implications of this technology, exploring its origins, benefits, and future potential.

The Genesis of On-Device AI at Microsoft

Microsoft’s journey into on-device AI didn’t begin with Windows 11; it started with the Surface Hub 2 Smart Camera. Introduced several years ago, this device used specialized hardware to execute sophisticated AI tasks locally. These included intelligent features like automatic perspective correction and dynamic video reframing. The key innovation was performing these computationally intensive operations without sending data to the cloud. This approach offered immediate benefits: reduced latency, enhanced privacy, and freed-up system resources.

This early success demonstrated the power of purpose-built AI hardware. By offloading AI workloads to dedicated processors, the central processing unit (CPU) and graphics processing unit (GPU) could focus on other tasks, resulting in a smoother, more efficient overall experience. This concept, initially applied to a collaborative conferencing device, is now being extended to the entire Windows ecosystem.

Understanding Neural Processing Units (NPUs)

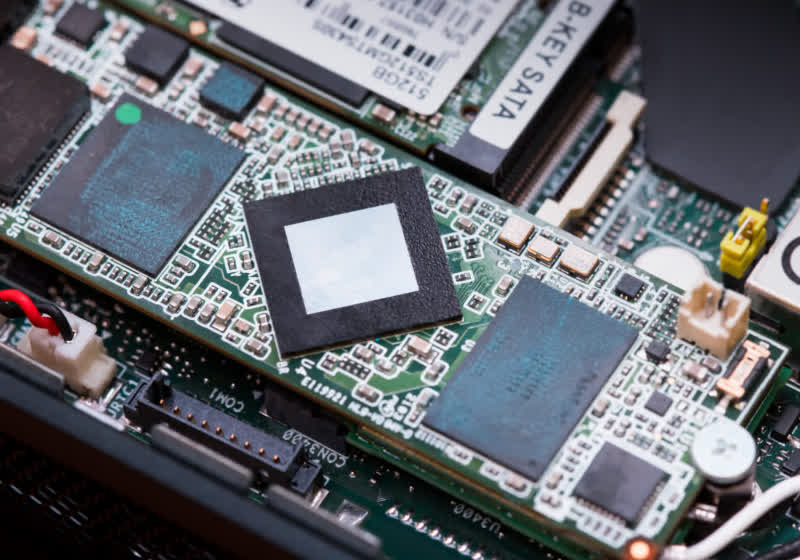

NPUs are specialized hardware accelerators designed specifically for machine learning tasks. Unlike CPUs, which are general-purpose processors, and GPUs, which excel at parallel processing for graphics, NPUs are optimized for the matrix multiplications and other operations that form the core of neural networks. This specialization translates into significant performance gains and energy efficiency when running AI models.
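To make the "matrix multiplications" point concrete, here is a minimal sketch in plain Python of a single fully connected neural-network layer. It is only an illustration of the arithmetic an NPU is built to accelerate, not how any NPU or Windows API is actually programmed; the function names `dense_layer` and `count_macs` and the sample numbers are made up for this example.

```python
def dense_layer(inputs, weights, biases):
    """Compute one fully connected layer: outputs = inputs x weights + biases.

    inputs:  list of rows, each with `in_features` values
    weights: `in_features` rows, each with `out_features` values
    biases:  list of `out_features` values
    """
    outputs = []
    for row in inputs:
        out_row = []
        for j in range(len(biases)):
            acc = biases[j]
            # Each step here is one multiply-accumulate (MAC) operation.
            for k, x in enumerate(row):
                acc += x * weights[k][j]
            out_row.append(acc)
        outputs.append(out_row)
    return outputs


def count_macs(batch, in_features, out_features):
    """Number of multiply-accumulate operations the layer above performs."""
    return batch * in_features * out_features


# Hypothetical toy data: 2 input rows, 3 input features, 2 output features.
inputs = [[1, 2, 3], [4, 5, 6]]
weights = [[1, 0], [0, 1], [1, 1]]
biases = [0, 10]

result = dense_layer(inputs, weights, biases)
macs = count_macs(batch=2, in_features=3, out_features=2)
print(result)  # [[4, 15], [10, 21]]
print(macs)    # 12
```

Even this toy layer needs 12 multiply-accumulate steps. Real models repeat the same pattern across layers with thousands of features each, which is why hardware that performs many of these steps in parallel pays off.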

Here’s a breakdown of the key advantages of NPUs:

* Increased Speed: NPUs dramatically accelerate AI tasks, making features like real-time translation, image recognition, and voice control much faster and more responsive.

* Improved Efficiency: By handling AI workloads independently, NPUs reduce the strain on the CPU and GPU, leading to lower power consumption and longer battery life.

* Enhanced Privacy: Processing data locally on the device minimizes the need to send sensitive information to the cloud, bolstering user privacy.

* Offline Functionality: AI-powered features can continue to function even without an internet connection.

Windows and the Future of On-Device AI

Microsoft’s commitment to NPUs in Windows represents a strategic shift towards a more intelligent and personalized computing experience. The company is actively working with hardware partners to integrate NPUs into a wide range of devices, from laptops and desktops to tablets and 2-in-1s.

Recent advancements, such as Qualcomm’s Snapdragon X Elite platform (announced in October 2023), showcase the potential of NPUs. These platforms boast dedicated NPUs capable of delivering over 40 TOPS (trillions of operations per second) of performance, enabling features like advanced noise cancellation, intelligent camera enhancements, and real-time language translation.
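For a rough sense of scale, TOPS stands for trillions of operations per second, so a peak rating converts directly into a best-case time per inference. The sketch below does that arithmetic. The 2-billion-operation model is a hypothetical figure chosen for illustration, and the result is a theoretical floor only: peak TOPS ratings are typically quoted for low-precision arithmetic, and real workloads are usually slowed by memory bandwidth and scheduling overhead.

```python
def ideal_seconds(total_ops, tops):
    """Best-case time to execute `total_ops` operations at a peak rating of `tops` TOPS."""
    ops_per_second = tops * 1e12  # 1 TOPS = 10**12 operations per second
    return total_ops / ops_per_second


# Assumption for illustration: a model needing 2 billion operations per inference.
model_ops = 2_000_000_000

latency = ideal_seconds(model_ops, tops=40)
print(f"Ideal latency at 40 TOPS: {latency * 1000:.3f} ms")
print(f"Ideal inferences per second: {1 / latency:,.0f}")
```

Under these assumptions the floor is a small fraction of a millisecond per inference, which is why features such as real-time translation or camera effects become practical to run continuously on the device.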

The implications for Windows are far-reaching. We can expect to see:

* Smarter Applications: Software developers will be able to leverage the power of NPUs to create more intelligent and responsive applications.

* Enhanced Security: AI-powered security